The use of AI in content generation is becoming more common across the internet. As an agency that both creates and helps others develop content, we were curious to see if using AI would impact content performance. On one hand, tools like ChatGPT are incredibly effective at producing clear, concise, and well-organized content. On the other, we wondered if the absence of a human writer would negatively affect how users engage with our content, as well as how Google would choose to rank it.

To put AI content to the test in a way that played to its strength in delivering straightforward, informational content, we decided to create a glossary. This glossary included 89 marketing-related terms we felt would add valuable knowledge to our website. We split the work between AI-generated content and human-written content.

Though we were aware of the growing use of AI in content creation, we were still skeptical about how AI content would perform. This project began a year ago, and even though Google had stated it would “reward high-quality content, however it is produced,” we wanted to see the results for ourselves.

A year later, with our glossary fully published and six months of performance data at hand, we’re ready to analyze the results. Did Google favor the human-written pages, or did it stay true to its word, rewarding quality content regardless of how it was produced?

The glossary we created

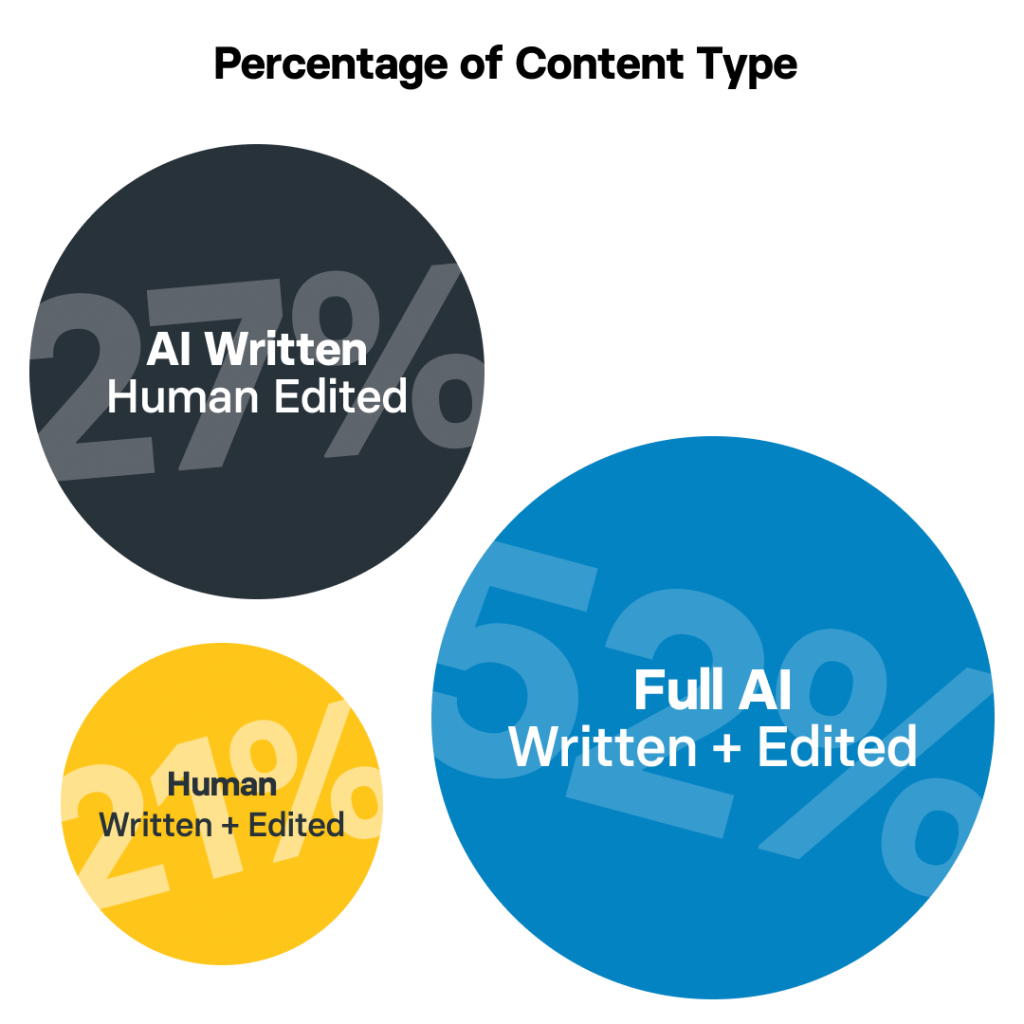

We created a list of 89 marketing-related terms that we felt would be valuable additions to our website’s glossary. As discussions around AI content continued to evolve, we decided to categorize the content in three ways to better understand if the origin of the writing—whether fully human, AI-assisted, or entirely AI-generated—would impact performance.

Our approach involved creating three types of glossary entries:

Fully Human-Written Posts: These entries were written entirely by a human without any AI assistance.

AI-Generated with Human Editing: In this category, AI generated the initial draft, and a human editor refined the content for quality, clarity, and accuracy.

Fully AI-Generated Posts: These entries were produced solely by AI, with minimal human involvement beyond formatting and linking.

In the end, the distribution across the types was as follows:

- 24 (27%) AI-Generated with Human Editing: Drafted by AI and then edited by a human for quality.

- 46 (52%) Fully AI-Generated: Created solely by AI, with human intervention limited to formatting and link placement.

- 19 (21%) Fully Human-Written: Crafted entirely by a human writer without AI input.

The uneven split reflects a change in our approach partway through the project. Google released guidance indicating that the use of AI would not, by itself, affect rankings and that only content quality would matter. In response, we shifted more of the remaining entries toward full AI generation to evaluate performance against Google’s stated standard.

Analyzing Performance Metrics

To assess how each type of content performed, we collected six months of data on four key metrics:

- Clicks: The number of times users clicked on a glossary entry from search results.

- Impressions: The total number of times a glossary entry appeared in search results.

- Click-Through Rate (CTR): The percentage of impressions that led to clicks, offering insight into how enticing the search snippet was.

- Average Position: The average rank of each glossary post in search results.
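The two derived metrics can be computed from per-query data for a single entry. The sketch below is illustrative only: the `QueryRow` rows, query strings, and numbers are hypothetical, and it assumes average position is an impression-weighted mean of the position the entry held for each query. Google Search Console’s exact aggregation may differ.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    # Hypothetical per-query row for one glossary entry (illustrative data only).
    query: str
    clicks: int
    impressions: int
    position: float  # rank for this query; 1 is the top result


def ctr_percent(clicks: int, impressions: int) -> float:
    """Click-through rate: clicks as a percentage of impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def average_position(rows: list[QueryRow]) -> float:
    """Impression-weighted mean position (an assumed aggregation method)."""
    total_impressions = sum(r.impressions for r in rows)
    if total_impressions == 0:
        return float("nan")
    return sum(r.position * r.impressions for r in rows) / total_impressions


rows = [
    QueryRow("what is a follower count", clicks=3, impressions=100, position=4.0),
    QueryRow("follower count meaning", clicks=1, impressions=300, position=8.0),
]
total_clicks = sum(r.clicks for r in rows)
total_impressions = sum(r.impressions for r in rows)
print(ctr_percent(total_clicks, total_impressions))  # 1.0
print(average_position(rows))  # 7.0
```

Weighting by impressions means a query the entry shows up for often counts more toward its average position than a rare one; a lower result is better.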

Upon analysis, we found that 56 of the 89 glossary entries received no clicks during the six-month period. While this might initially seem discouraging, it’s important to consider that content success can be influenced by many factors, including:

- Search Intent: How well the content matches what users are seeking.

- Keyword Difficulty: Competition for keywords can make it harder for new pages to rank.

- Search Volume: Terms with low search volume naturally see fewer impressions and clicks.

Setting the stage for results

With six months of performance data in hand, we have a clearer view of how each type of glossary entry performed on these core metrics. Although factors such as search intent alignment, keyword difficulty, and search volume also shape visibility and engagement, our primary question is whether human-written content consistently outperforms AI-generated content, or whether AI content can compete on equal footing.

In the next section, we’ll dive into the results, comparing clicks, impressions, CTR, and average position for each type of glossary post to see if our experiment revealed any significant differences in performance based on content origin.

Results

Clicks

Of the posts that received clicks, 16 were fully AI-generated, accounting for 35% of all fully AI-generated posts. For fully human-written content, 11 posts received clicks, making up 58% of this category. Meanwhile, 6 posts in the AI-generated with human editing category received clicks, representing 25% of that group.

For posts that received no clicks, the breakdown was as follows: 30 of the fully AI-generated posts (65%) did not receive clicks. In the AI-generated with human editing category, 18 posts, or 75%, had no clicks. Among fully human-written posts, 8 posts (42%) received no clicks.
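A quick tally of the counts reported above reproduces the per-category percentages and the totals across all 89 entries. The numbers come straight from this section; the dictionary layout is just one convenient way to hold them.

```python
# Counts from the Clicks breakdown: (posts with clicks, posts without clicks).
breakdown = {
    "Full AI": (16, 30),
    "AI - Human Edited": (6, 18),
    "No AI Used": (11, 8),
}

total_with = 0
total_without = 0
for category, (with_clicks, without_clicks) in breakdown.items():
    total = with_clicks + without_clicks
    total_with += with_clicks
    total_without += without_clicks
    share = 100 * with_clicks / total
    print(f"{category}: {with_clicks}/{total} posts with clicks ({share:.0f}%)")

print(f"All entries: {total_with} with clicks, {total_without} without, "
      f"{total_with + total_without} total")
```

The per-category rows print 35%, 25%, and 58%, and summing the three categories gives 33 entries with clicks and 56 without.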

Although a higher share of the human-written posts received at least one click, the five posts with the most clicks all incorporated AI in some way. This finding suggests that AI-generated content, with or without human editing, can compete effectively with fully human-written content and, for these terms, exceed it in total clicks.

The top 10 glossary posts by clicks include 4 fully AI-generated posts, 3 human-written posts, and 3 AI-generated posts with human editing, so every content creation method is represented.

Top 10 Glossary Posts by Clicks

- Follower Count – AI – Human Edited – 329 clicks

- Social Media Algorithm – Full AI – 321 clicks

- H1-H4 Header Tags – Full AI – 141 clicks

- Black Hat SEO – Full AI – 60 clicks

- Website Authority – Full AI – 26 clicks

- Holistic SEO – No AI Used – 18 clicks

- Blog – No AI Used – 15 clicks

- Direct Message – No AI Used – 12 clicks

- Bots – AI – Human Edited – 9 clicks

- Viral Content – AI – Human Edited – 6 clicks
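The type breakdown of a ranked list can be checked by counting content types directly. The sketch below copies the top 10 by clicks from the list above; `count_by_type` is a hypothetical helper name, and the same function could be pointed at any of the other top-10 lists.

```python
from collections import Counter

# Top 10 glossary posts by clicks, as listed above: (term, content type, clicks).
top_by_clicks = [
    ("Follower Count", "AI - Human Edited", 329),
    ("Social Media Algorithm", "Full AI", 321),
    ("H1-H4 Header Tags", "Full AI", 141),
    ("Black Hat SEO", "Full AI", 60),
    ("Website Authority", "Full AI", 26),
    ("Holistic SEO", "No AI Used", 18),
    ("Blog", "No AI Used", 15),
    ("Direct Message", "No AI Used", 12),
    ("Bots", "AI - Human Edited", 9),
    ("Viral Content", "AI - Human Edited", 6),
]


def count_by_type(posts):
    """Count how many posts of each content type appear in a ranked list."""
    return Counter(content_type for _, content_type, _ in posts)


counts = count_by_type(top_by_clicks)
# The list is already sorted by clicks, so the first five entries are the top five.
top_five_use_ai = all(ctype != "No AI Used" for _, ctype, _ in top_by_clicks[:5])

print(dict(counts))  # {'AI - Human Edited': 3, 'Full AI': 4, 'No AI Used': 3}
print(top_five_use_ai)  # True
```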

Impressions

Among the top 10 glossary posts by impressions, 5 are fully AI-generated, 3 were written with no AI, and 2 are AI-generated with human editing. This breakdown shows that every content creation approach can reach high visibility: fully AI-generated posts hold half of the spots, while the entry with the most impressions, Search Engine Marketing, was fully human-written.

Top 10 Glossary Posts by Impressions

- Search Engine Marketing – No AI Used – 66,840 impressions

- Black Hat SEO – Full AI – 51,946 impressions

- White Hat SEO – No AI Used – 36,951 impressions

- H1-H4 Header Tags – Full AI – 35,726 impressions

- Follower Count – AI – Human Edited – 35,700 impressions

- Social Media Algorithm – Full AI – 31,913 impressions

- Website Authority – Full AI – 29,313 impressions

- Keyword Stuffing – No AI Used – 27,540 impressions

- User-Generated Content – Full AI – 22,344 impressions

- Site Structure – AI – Human Edited – 17,468 impressions

Average Position

The top 10 glossary posts by average position (where a lower number means a higher ranking in search results) include 6 fully AI-generated posts and 4 AI-generated posts with human editing. No fully human-written post appears in this group.

Top 10 Glossary Posts by Average Position

- Social Media Analytics – AI – Human Edited – Position 4.8

- Meta Description – Full AI – Position 5.92

- Structured Data – Full AI – Position 6

- Relevancy – Full AI – Position 7

- Keyword Research – Full AI – Position 7.29

- Conversion – AI – Human Edited – Position 8.71

- CMS – AI – Human Edited – Position 9.33

- Social Media Automation – AI – Human Edited – Position 9.79

- Broken Links – Full AI – Position 10.75

- Backlinks – Full AI – Position 11.24

CTR

The top 10 glossary posts by click-through rate (CTR) include 5 fully AI-generated posts, 4 human-written posts, and 1 AI-generated post with human editing. The human-written entry for Blog leads the list at 2.09%, roughly double the next-highest CTR.

Top 10 Glossary Posts by CTR

- Blog – No AI Used – 2.09% CTR

- Social Media Algorithm – Full AI – 1.01% CTR

- Social Listening – Full AI – 1.01% CTR

- Follower Count – AI – Human Edited – 0.92% CTR

- Influencer Marketing – No AI Used – 0.49% CTR

- H1-H4 Header Tags – Full AI – 0.39% CTR

- Guest Posting – Full AI – 0.19% CTR

- Holistic SEO – No AI Used – 0.18% CTR

- Google Ads – Full AI – 0.17% CTR

- Direct Message – No AI Used – 0.16% CTR
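Because CTR is clicks divided by impressions, entries that appear in both the clicks and impressions lists can be cross-checked against the CTR figures. The sketch below uses only numbers reported in this article.

```python
# Clicks and impressions reported above for entries that appear in both
# the top 10 by clicks and the top 10 by impressions.
reported = {
    "Follower Count": (329, 35_700),
    "Social Media Algorithm": (321, 31_913),
    "H1-H4 Header Tags": (141, 35_726),
    "Black Hat SEO": (60, 51_946),
    "Website Authority": (26, 29_313),
}

# CTR (%) = clicks / impressions * 100, rounded to two decimals like the list above.
ctrs = {
    term: round(100 * clicks / impressions, 2)
    for term, (clicks, impressions) in reported.items()
}

for term, ctr in ctrs.items():
    print(f"{term}: {ctr}% CTR")
```

The first three results match the listed CTRs (0.92%, 1.01%, and 0.39%). Black Hat SEO works out to about 0.12% and Website Authority to about 0.09%. Both fall below the 0.16% cutoff for the CTR top 10 despite strong click counts, because their impressions are comparatively large.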

CTR by Content Type

- AI – Human Edited: 0.39% CTR

- Full AI: 0.23% CTR

- No AI Used: 0.04% CTR
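A per-type CTR can be computed in two common ways: pooling all clicks and impressions within a type, or averaging each post’s own CTR. The article does not say which method produced the figures above. As an illustration only, the sketch below applies both methods to the four fully AI-generated entries whose clicks and impressions are both listed in this article. Those four are only a subset of the category, so neither result corresponds to the 0.23% figure.

```python
# Clicks and impressions listed above for four fully AI-generated entries.
# This is a subset of the Full AI category, used purely for illustration.
entries = {
    "Social Media Algorithm": (321, 31_913),
    "H1-H4 Header Tags": (141, 35_726),
    "Black Hat SEO": (60, 51_946),
    "Website Authority": (26, 29_313),
}

# Pooled CTR: total clicks over total impressions (weights posts by impressions).
total_clicks = sum(c for c, _ in entries.values())
total_impressions = sum(i for _, i in entries.values())
pooled_ctr = 100 * total_clicks / total_impressions

# Mean CTR: average of each post's own CTR (weights every post equally).
mean_ctr = sum(100 * c / i for c, i in entries.values()) / len(entries)

print(f"Pooled CTR: {pooled_ctr:.2f}%")  # Pooled CTR: 0.37%
print(f"Mean CTR:   {mean_ctr:.2f}%")    # Mean CTR:   0.40%
```

Pooling lets high-impression, low-CTR entries such as Black Hat SEO pull the figure down. A simple mean gives every post equal weight, so one strong performer like Social Media Algorithm lifts it.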

Key Takeaways

The data suggests that AI-generated content, whether edited or fully automated, can drive engagement at least as well as entirely human-written posts: both AI categories recorded a higher CTR by content type than the human-written group. However, the single top performer by CTR was the human-written entry for Blog, at 2.09%, which indicates that well-crafted, human-written content can still lead in specific contexts. AI-driven content creation, especially when paired with human editing, is a valuable strategy for scaling high-quality, engaging content.

Summary

Overall, no content type outperformed the others by enough to suggest a clear advantage. Having a larger number of AI-generated glossary posts did provide more opportunities to rank, but ultimately the metrics were mostly balanced, with each content type performing well in its own way. One standout observation is that the top 5 glossary posts by clicks all used AI to a significant extent. This may be influenced by search volume and keyword difficulty, but the data shows no sign that Google limited impressions or clicks for AI-generated content.

Based on this data, we conclude that, as of now, Google is honoring its statement to “reward high-quality content, however it is produced.”

What this means for using AI in content creation

While AI-generated posts held their own in this experiment, the results shouldn’t be taken as a blanket endorsement to generate and publish large amounts of AI-created content. Content must still be high quality regardless of how it’s produced, and both AI and humans can produce low-quality content if it isn’t approached thoughtfully. To rank well, content should align with audience needs, match keyword intent, and follow a strategic approach to keywords and topics, ideally with a human reviewer who understands these elements.

AI is a powerful tool that can help companies with limited resources produce content efficiently, but it should be used with intentionality and oversight to ensure that the content meets quality standards.

Conclusion

Our experiment set out to answer a key question: does using AI in content creation affect Google rankings? Over a year-long project, with six months of performance data on clicks, impressions, CTR, and average position, we observed that Google’s approach appears to be in line with its public statements. High-quality content performed well, regardless of whether it was produced by AI, humans, or a mix of both.

Our data shows that each content type had strengths, but none outshone the others to a definitive degree. Fully AI-generated content claimed the most spots in the top 10 lists by clicks and impressions, AI-generated content with human editing had the highest CTR by content type, and human-written entries had the highest share of posts earning at least one click, along with the single highest-CTR entry. Google’s algorithms did not appear to penalize AI-generated posts; instead, they appeared to reward content that met search intent and quality benchmarks. In a world where AI content is increasingly common, our findings highlight the importance of balancing efficiency with thoughtful content development, combining the speed of AI with human expertise to deliver meaningful, high-quality content that resonates with both users and search engines.