- While the reliability of AI content detectors is questionable, they were generally correct about short-form content in our test.

- AI content detectors use perplexity and burstiness as key metrics to understand if your content was written by a human.

- Google’s approach favors the quality of content over its origin, and it does not currently penalize AI-generated content.

- The ultimate focus should be on the quality and usefulness of content.

As AI and AI-assisted content continue to be published across the internet, you are bound to come across a piece of content where you’ll say, “This is definitely written by AI.” As the popularity of AI content increases and large language models (LLMs) get better at writing, it is going to be harder and harder to tell the difference between AI and human content. Here at Redefine, we look at, review, and edit a lot of written content. As the use of these tools continues to grow, we sometimes find ourselves asking: was this content written by an AI?

We started wondering if it was possible to tell. As AI content floods our feeds, will we be able to tell the difference between AI and human content? Can we ever be 100% sure? So, with our own pieces of content, we put the top three AI content detectors to the test to see how well they could actually find human content.

Detecting AI may be impossible?

Before testing these content detectors, we did research to see what other people had found. The overwhelming answer was that, with minimal effort, most AI-generated text could avoid AI detection tools. A well-organized study from the University of Maryland found that “a paraphrasing attack, where a lightweight neural network-based paraphraser is applied to the output text of the AI-generative model, can evade various types of detectors.” In other words, by using a paraphraser, one could easily bypass even the most effective AI detectors. Overall, the study concluded that “these detectors are not reliable in practical scenarios.”

The issue of AI content has mainly been discussed with regard to teachers being able to accurately tell whether a student used AI on homework. The Washington Post concluded that “Detecting AI may be impossible,” and New Scientist reported that “detectors to spot AI content will only be as accurate as flipping a coin.” Here at Redefine, we weren’t worried about having to grade student papers, but we were curious about the implications of AI content on the internet and whether we would have trouble knowing if the content we reviewed was written by a human.

Let’s put it to the test

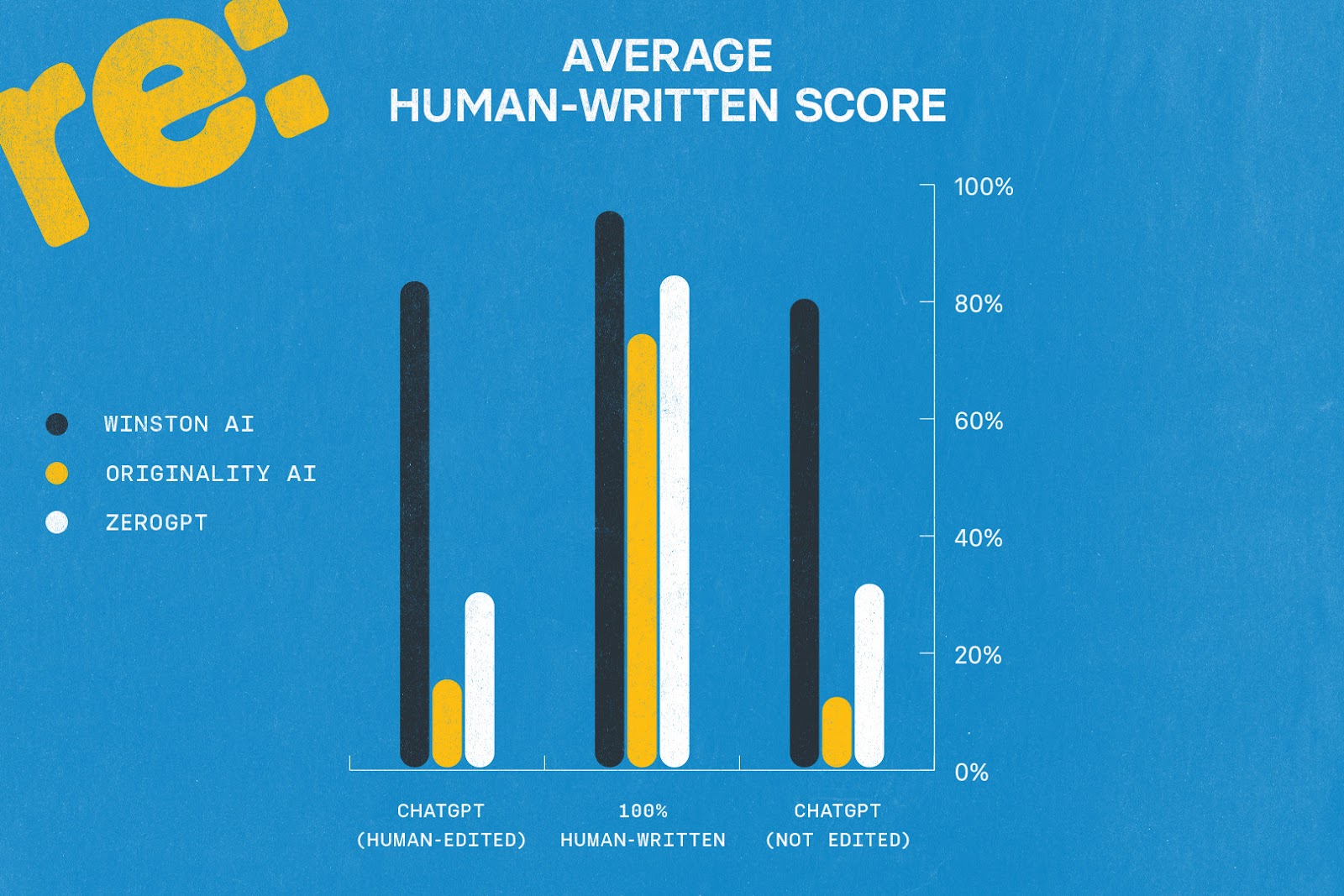

So, we put together a small test in order to see if AI used in a short blog format could, in fact, evade the top three AI detectors. We tested five texts from three different categories: Fully Human Content, ChatGPT 4 written content that was edited by a human, and fully ChatGPT 4 written content. Each of the texts was a 500-word blog meant to give the reader a definition of an online marketing term (like these).

We tested each piece of content using Winston AI, Originality AI, and GPTZero. The results were very interesting, considering the research beforehand. In the chart below, you can see how each detector did. The percentage shows how “human” the detector thought the content was. For example, on average, Winston AI thought that text written fully by ChatGPT was 80% human, while on average, Originality AI graded those same pieces of content as 12% human.

Results:

We were happy to see that, overall, the human-written content did score much better than AI and AI-assisted content. However, there are a few things to note from our test. All of this content was 450-550 words, and the longer the piece of text, the harder it is for a detector to spot AI. Also, in order to keep the content “high quality,” we avoided using any AI paraphrasing or rewording tools, as they tended to decrease the readability of the content.

Interesting conclusions:

- These detectors are not 100% accurate.

- Many fully AI texts were given scores of 70% and above.

- Some fully human content was given a score of 50% or below.

- On average, in this test, the human-written content scored much higher.

How do AI detectors work?

Does this mean that all these other studies were wrong and that we can fully trust AI content detectors? Not quite. Basically, AI content detectors work by running their own algorithm or LLM against the content that you give them. They are using a process similar to that of the AI tools that are writing the content in the first place. The two factors that AI content detectors are looking for are perplexity and burstiness.

Perplexity: refers to the degree of unpredictability in a text. Texts generated by AI typically exhibit lower perplexity due to their predictable nature, in contrast to the higher perplexity found in human-authored texts.
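To make the idea of perplexity concrete, here is a minimal sketch in Python. It is a toy, not how any of the detectors we tested actually work: real tools presumably score text with a large language model, while this example uses a simple word-frequency model built from a short reference text. The function names (`tokenize`, `unigram_perplexity`) and the sample sentences are our own assumptions for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation (a simplification)."""
    stripped = (word.strip(".,!?;:\"'()").lower() for word in text.split())
    return [word for word in stripped if word]


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a word-frequency model built from `reference`.

    Hypothetical helper for illustration only. Lower values mean the text is
    more predictable given the reference; higher values mean more surprising.
    """
    ref_tokens = tokenize(reference)
    counts = Counter(ref_tokens)
    vocab_size = len(counts) + 1  # one extra slot for words never seen before
    total = len(ref_tokens)

    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no tokens")

    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing so unseen words get a small, non-zero probability.
        p = (counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)

    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat the dog sat on the rug"
predictable = "the cat sat on the mat"
surprising = "quantum zebras juggle violet umbrellas"

print(unigram_perplexity(predictable, reference))
print(unigram_perplexity(surprising, reference))
```

The predictable sentence reuses words the model has already seen, so it gets the lower perplexity of the two. The surprising sentence is made up entirely of unseen words, so it scores higher.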

Burstiness: describes the fluctuations in sentence length and structure. Content produced by AI usually demonstrates less variability or lower burstiness compared to the more dynamic nature of human writing.
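Burstiness can be sketched in a similar way. The example below is one possible proxy, assuming that "fluctuation in sentence length" can be approximated by the standard deviation of sentence lengths divided by their mean. We do not know the exact formula any detector uses, and the function names (`sentence_lengths`, `burstiness`) are hypothetical.

```python
import re
import statistics


def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence (a rough split)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Variation in sentence length relative to the average sentence length.

    Hypothetical proxy for illustration: 0.0 means every sentence is the same
    length, and larger values mean a more uneven rhythm.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = (
    "Stop. The weather turned quickly and nobody in the valley "
    "had packed a coat for it. We left."
)

print(burstiness(uniform))
print(burstiness(varied))
```

The first sample has three sentences of identical length, so its score is zero. The second mixes a one-word sentence with a long one, so it scores higher, which is the kind of uneven rhythm that tends to show up in human writing.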

Detectors measure these two factors and run them through their own algorithm to determine a “Human Score” for your content.

What does Google think?

Is Google using detection methods to punish AI content? The short answer is no. As AI content starts to flood the internet, Google wants to be in on the action. They are developing their own AI (Bard) for content writing, and they are using AI to combine search results for better featured snippets. Google has even said that they have “long believed in the power of AI to transform the ability to deliver helpful information.” They have also said that they are “Rewarding high-quality content, however it is produced.”

This goes to show that, at the end of the day, the quality of the content you produce is the most important thing. Does the content you’re writing solve a problem? Does it give the reader high-quality, useful information? Would using AI stop that content from being the best it could be? These are questions to ask when deciding whether or not to use AI.

Conclusion

In our short test, the detectors were able to find most of the content written by AI. However, as time goes on, it might become more and more difficult to detect AI-written content. When used lazily, AI content can be predictable and repetitive. As content writers, we need to ask ourselves: does using AI take away from how effective our content is? The perplexity and burstiness of a human cannot always be replicated well by an AI, and sometimes, that’s the charm of a piece of content.

We cannot fully rely on AI content detectors to tell us what is human or not, but we can rely on the fact that writing high-quality content comes first. Depending on how you use it, AI can either be a tool that pushes your content forward or one that hinders it from truly helping people. Concerned that your content isn’t up to Google’s standards? Our team can help. Connect with us today to learn more.